Sparse Matrix Multiplication Torch

By popular demand, the function `torch.matmul` performs matrix multiplication if both arguments are 2D and computes their dot product if both arguments are 1D. This implementation extends the `torch.sparse.mm` function to support `torch.sparse.mm(sparse_matrix1, sparse_matrix2)`. Resolves #20988 for CPU/CUDA.

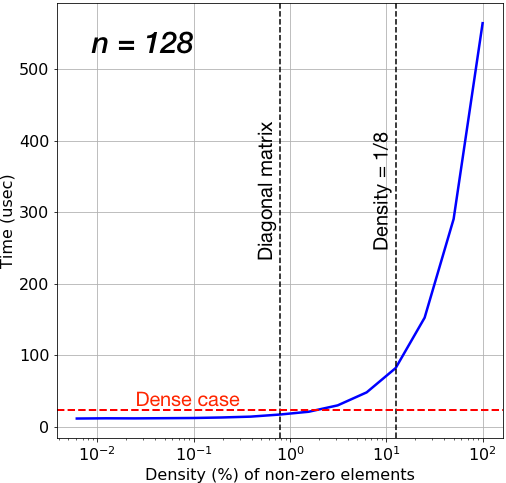

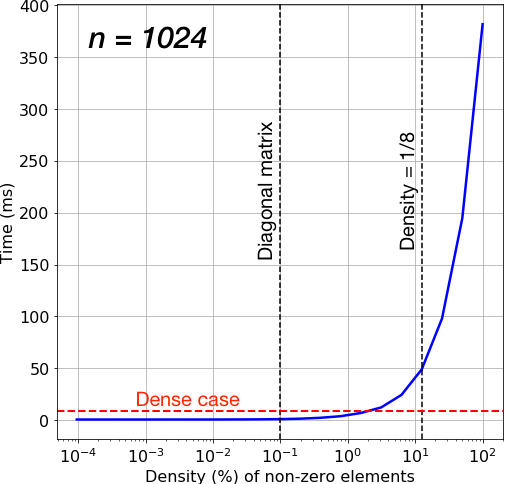

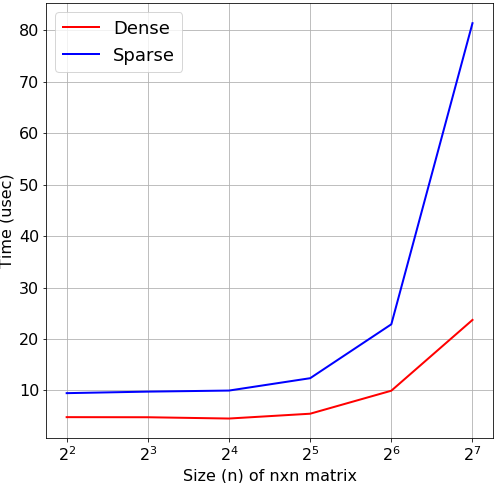

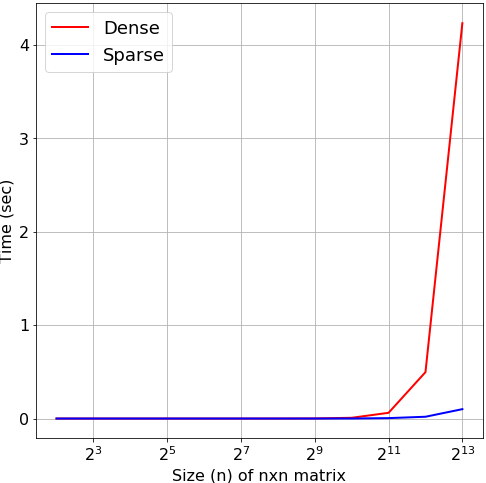

Sparse Matrices in PyTorch: This Article Will Analyze Runtimes of … (by Sourya Dey, Towards Data Science)

The result should consist of three sparse matrices: one obtained by adding the two input matrices, one obtained by multiplying the two matrices, and one obtained by transposing the first matrix.
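A minimal sketch of producing those three results with core PyTorch, assuming the COO sparse layout and two small 3x3 matrices chosen here purely for illustration. Sparse-plus-sparse addition and `torch.sparse.mm` of two sparse tensors both return sparse tensors, and `.t()` transposes a 2D sparse tensor without densifying it.

```python
import torch

# Two small illustrative sparse matrices (values chosen arbitrarily).
a = torch.tensor([[1., 0., 2.],
                  [0., 0., 3.],
                  [4., 0., 0.]]).to_sparse()
b = torch.tensor([[0., 5., 0.],
                  [6., 0., 0.],
                  [0., 0., 7.]]).to_sparse()

sum_ab = a + b                   # sparse + sparse -> sparse
prod_ab = torch.sparse.mm(a, b)  # sparse @ sparse -> sparse
a_t = a.t()                      # transpose of the first matrix, still sparse

for name, t in [("sum", sum_ab), ("product", prod_ab), ("transpose", a_t)]:
    print(name, t.layout)
    print(t.to_dense())
```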

This formulation allows one to leverage dedicated, fast sparse-matrix multiplication implementations. Sparse-sparse matrix multiplication: all included operations work on varying data types and are implemented for both CPU and GPU. `torch.sparse.mm(mat1, mat2)` performs a matrix multiplication of the sparse matrix `mat1` and the sparse or strided matrix `mat2`.
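A short sketch of the two `mat2` cases described above, using arbitrary example values: with a strided (dense) `mat2` the result is dense, and with a sparse `mat2` the result stays sparse.

```python
import torch

# mat1 is sparse COO: entries (0, 2) = 3, (1, 0) = 4, (1, 2) = 5.
mat1 = torch.sparse_coo_tensor(
    torch.tensor([[0, 1, 1],     # row indices
                  [2, 0, 2]]),   # column indices
    torch.tensor([3., 4., 5.]),
    (2, 3),
)
dense = torch.arange(6, dtype=torch.float32).reshape(3, 2)

out_dense = torch.sparse.mm(mat1, dense)               # sparse @ strided -> strided
out_sparse = torch.sparse.mm(mat1, dense.to_sparse())  # sparse @ sparse -> sparse

print(out_dense)             # values [[12, 15], [20, 29]]
print(out_sparse.to_dense())
```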

In PyTorch Geometric 1.6.0, we officially introduce better support for sparse-matrix multiplication GNNs, resulting in a lower memory footprint and a faster execution time. With `D = torch.ones(3, 4, dtype=torch.int64)`, `torch.sparse.mm(S, D)` performs a sparse-by-dense multiplication of a sparse tensor `S` by `D`; the output begins `tensor([[3, 3`. For 1D and 2D inputs, the behaviour of `torch.matmul` is the same as `np.dot`.
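To illustrate the `np.dot` equivalence for 1D and 2D inputs, here is a small comparison on arbitrary example arrays. A 1D-by-1D `torch.matmul` returns a 0-dim tensor holding the dot product, and a 2D-by-2D call returns the ordinary matrix product.

```python
import numpy as np
import torch

v = np.array([1., 2., 3.])
m = np.array([[1., 0., 2.],
              [0., 1., 1.]])
w = np.array([[1., 2.],
              [3., 4.],
              [5., 6.]])

# 1D x 1D: dot product (a 0-dim tensor), same as np.dot.
dot_t = torch.matmul(torch.from_numpy(v), torch.from_numpy(v))
# 2D x 2D: matrix-matrix product, same as np.dot.
mm_t = torch.matmul(torch.from_numpy(m), torch.from_numpy(w))

print(dot_t.item(), np.dot(v, v))             # 14.0 14.0
print(np.allclose(mm_t.numpy(), np.dot(m, w)))  # True
```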

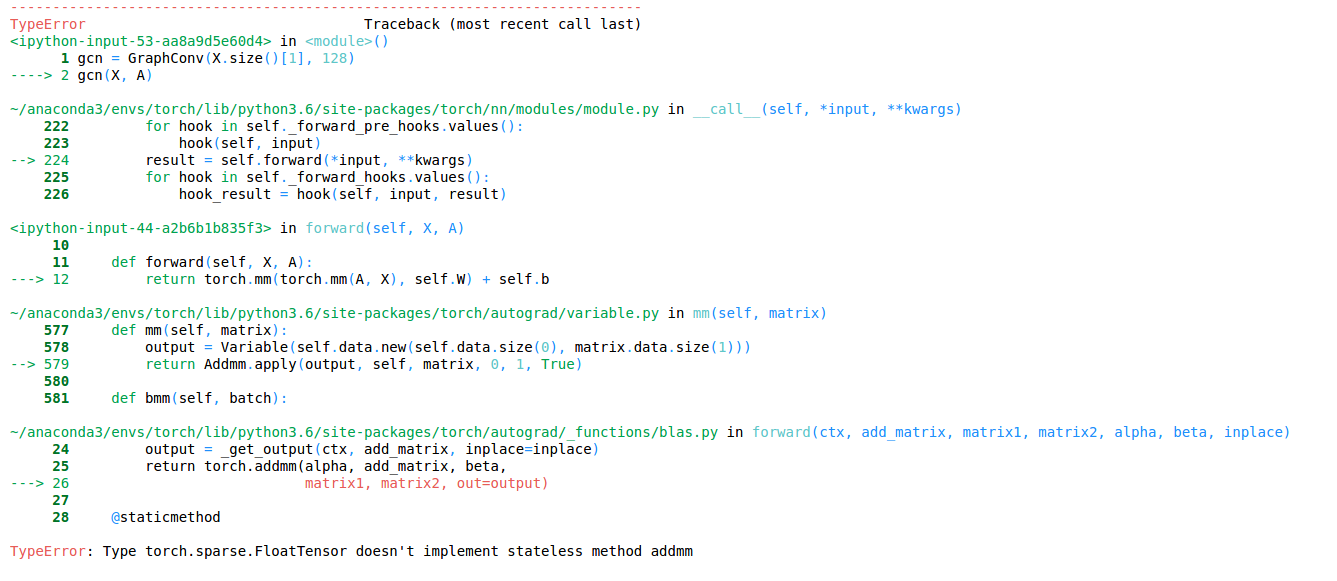

Note that the other entries of the matrices will be zero, as the matrices are sparse. Sparse-sparse matrix multiplication: `torch_sparse.spspmm(indexA, valueA, indexB, valueB, m, k, n) -> (torch.LongTensor, torch.Tensor)` returns the matrix product of two sparse tensors. `self.A = torch.autograd.Variable(random_sparse(n, dim))` and `self.w = torch.autograd.Variable(torch.Tensor(np.random.normal(0, 1, (dim, dim))))`. My PyTorch version is 0.1.12_2; I would greatly appreciate possible solutions.
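The index/value calling convention can be mimicked in core PyTorch. The sketch below defines a hypothetical helper, `spspmm_like`, that is NOT `torch_sparse.spspmm`; it only assumes the same argument order (an `m x k` matrix A and a `k x n` matrix B given as COO index and value tensors) and delegates the product to `torch.sparse.mm`.

```python
import torch

def spspmm_like(index_a, value_a, index_b, value_b, m, k, n):
    """Hypothetical stand-in for an (index, value) sparse-sparse matmul.
    A is m x k, B is k x n; returns the COO indices and values of C = A @ B."""
    a = torch.sparse_coo_tensor(index_a, value_a, (m, k)).coalesce()
    b = torch.sparse_coo_tensor(index_b, value_b, (k, n)).coalesce()
    c = torch.sparse.mm(a, b).coalesce()  # sparse @ sparse -> sparse
    return c.indices(), c.values()

# A (2 x 3) and B (3 x 2) given as COO index/value pairs (example values).
index_a = torch.tensor([[0, 0, 1], [0, 2, 1]])
value_a = torch.tensor([1., 2., 3.])
index_b = torch.tensor([[0, 1, 2], [1, 0, 1]])
value_b = torch.tensor([4., 5., 6.])

index_c, value_c = spspmm_like(index_a, value_a, index_b, value_b, 2, 3, 2)
print(index_c)
print(value_c)
```

Only structurally nonzero products are stored, so entries that no pair of nonzeros reaches stay implicitly zero.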

Fast Block Sparse Matrices for PyTorch: this PyTorch extension provides a drop-in replacement for `torch.nn.Linear` using block-sparse matrices instead of dense ones. For the diagonal matrix in the sparse case, this means the density of the sparse matrix is $1/n$, where $n$ is the number of rows. In "Fast Sparse Matrix Multiplication", [3] (1969) is credited as the first to show that the naive algorithm is not optimal, giving an $O(n^{2.81})$ algorithm for the problem.

More information on the fascinat-. The `torch.sparse` API includes some functions identical to regular mathematical functions, such as `mm` for multiplying a sparse matrix with a dense matrix. Both input sparse matrices need to be coalesced (use the `coalesced` attribute to force this).
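What "coalesced" means can be seen with core PyTorch's `Tensor.coalesce()` and `Tensor.is_coalesced()`; this sketch uses those methods rather than the `coalesced` option mentioned above, and the duplicate entry is constructed deliberately for illustration.

```python
import torch

# Two values stored at the same position (0, 1): the tensor is uncoalesced.
i = torch.tensor([[0, 0, 1],
                  [1, 1, 2]])
v = torch.tensor([2., 3., 4.])
s = torch.sparse_coo_tensor(i, v, (2, 3))
print(s.is_coalesced())   # False: duplicates have not been merged yet

c = s.coalesce()          # sums duplicate entries and sorts the indices
print(c.is_coalesced())   # True
print(c.indices())        # indices [[0, 1], [1, 2]]
print(c.values())         # values [5., 4.]
```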

The sparse case is multiplying a diagonal matrix with a dense matrix. To avoid the hassle of creating a `torch.sparse_coo_tensor`, this package (`torch_sparse`) defines operations on sparse tensors by simply passing index and value tensors as arguments, with the same shapes as defined in PyTorch. Generalized sparse matrix-matrix multiplication (SpGEMM) is the key computing kernel for many algorithms, such as compressed deep neural networks, triangle counting, Markov clustering, searching algorithms, and matching algorithms.
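A sketch of the sparse case, assuming an arbitrary 4x4 diagonal and a random dense right-hand side. An `n x n` diagonal matrix stores `n` nonzeros out of `n * n` entries, giving density `1/n`, and multiplying by it simply scales each row of the dense matrix.

```python
import torch

n = 4
d = torch.tensor([1., 2., 3., 4.])  # illustrative diagonal entries
idx = torch.arange(n)

# Diagonal n x n matrix in COO form: entry (i, i) = d[i].
diag = torch.sparse_coo_tensor(torch.stack([idx, idx]), d, (n, n))
density = diag.coalesce().values().numel() / (n * n)  # n / n^2 = 1/n

dense = torch.randn(n, 3)
out = torch.sparse.mm(diag, dense)

# Multiplying by a diagonal matrix scales row i of `dense` by d[i].
print(density, torch.allclose(out, d[:, None] * dense))  # 0.25 True
```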

The currently fastest matrix multiplication algorithm, with a complexity of $O(n^{2.38})$, was obtained by Coppersmith and Winograd (1990). `torch.sparse.addmm` does exactly the same thing as `torch.addmm` in the forward pass, except that it supports backward for the sparse matrix `mat1`. Similar to `torch.mm`, if `mat1` is an $(n \times m)$ tensor and `mat2` is an $(m \times p)$ tensor, `out` will be an $(n \times p)$ tensor.
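A minimal sketch of the forward equivalence and the backward support, with example shapes `n = 2`, `m = 3`, `p = 4` chosen for illustration. `torch.sparse.addmm(mat, mat1, mat2)` adds the dense `mat` to `mat1 @ mat2`, and the gradient reaches the sparse leaf `mat1`.

```python
import torch

# Sparse mat1 (2 x 3) that requires grad; dense mat2 (3 x 4) and dense mat (2 x 4).
mat1 = torch.tensor([[1., 0., 2.],
                     [0., 3., 0.]]).to_sparse().requires_grad_()
mat2 = torch.randn(3, 4)
mat = torch.randn(2, 4)

out = torch.sparse.addmm(mat, mat1, mat2)  # mat + mat1 @ mat2, shape (2, 4)
out.sum().backward()

# Forward matches dense torch.addmm; the gradient flows back to sparse mat1.
print(torch.allclose(out, torch.addmm(mat, mat1.detach().to_dense(), mat2)))
print(mat1.grad)
```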

The dense case multiplies two dense matrices; however, both dense matrices are randomly generated, unlike Part 1, where one dense matrix was simply the densified version of the sparse matrix. `torch.sspaddmm` matrix-multiplies a sparse tensor `mat1` with a dense tensor `mat2`, then adds the sparse tensor `input` to the result. `torch.matmul` returns the matrix product of two tensors.
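As a small illustration of `torch.sspaddmm`, with example values chosen by hand so the result is easy to check: the sparse `input` is added to the product of sparse `mat1` and dense `mat2`, and the result is returned in sparse COO layout.

```python
import torch

inp = torch.tensor([[1., 0.],
                    [0., 2.]]).to_sparse()       # sparse tensor to add
mat1 = torch.tensor([[0., 3., 0.],
                     [4., 0., 0.]]).to_sparse()  # sparse left factor
mat2 = torch.tensor([[1., 1.],
                     [2., 0.],
                     [0., 5.]])                  # dense right factor

out = torch.sspaddmm(inp, mat1, mat2)  # inp + mat1 @ mat2, returned as sparse
print(out.layout)
print(out.to_dense())                  # values [[7, 0], [4, 6]]
```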

If you do want to apply a NumPy function to these matrices, first check whether SciPy has its own implementation for the given sparse matrix class, or convert the sparse matrix to a NumPy array (e.g., using the class's `toarray()` method) before applying the method. If both tensors are 1-dimensional, the dot product (a scalar) is returned. Sparse-sparse matrix-matrix multiplication (SpGEMM) is a key computational primitive in many important application domains, such as graph analytics, machine learning, and scientific computation.

For example, Harwell-Boeing is used for sparse matrices, and Matrix Market is used for both sparse and dense matrices. PyTorch has the `torch.sparse` API for dealing with sparse matrices. The behavior of `torch.matmul` depends on the dimensionality of the tensors as follows.

`torch.matmul(input, other, *, out=None) → Tensor`. An embedding layer is just a domain-specific name for a sparse-dense matrix-matrix multiplication where the sparse matrix has one-hot rows. Many improvements then followed.
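The embedding claim can be checked directly. This sketch, with a randomly initialized weight and example token ids assumed for illustration, builds a sparse matrix whose rows are one-hot and compares its product with the weight against an ordinary `F.embedding` lookup.

```python
import torch
import torch.nn.functional as F

num_embeddings, dim = 5, 3
weight = torch.randn(num_embeddings, dim)
ids = torch.tensor([4, 0, 4, 2])  # example token ids for 4 lookups

# One-hot rows: row r has a single 1 in column ids[r].
rows = torch.arange(len(ids))
one_hot = torch.sparse_coo_tensor(torch.stack([rows, ids]),
                                  torch.ones(len(ids)),
                                  (len(ids), num_embeddings))

via_sparse_mm = torch.sparse.mm(one_hot, weight)  # (4, 5) @ (5, 3) -> (4, 3)
via_lookup = F.embedding(ids, weight)             # ordinary embedding lookup
print(torch.allclose(via_sparse_mm, via_lookup))  # True
```

The lookup form avoids materializing the one-hot matrix at all, which is why embedding layers are implemented as indexing rather than as a literal multiplication.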

Sparse matmul: CPU/CUDA C++ implementation, unit tests, updated `torch.sparse.mm` documentation, and autograd support. The CPU sparse-sparse matmul was implemented taking as a reference the Sparse Matrix Multiplication Package (SMMP).
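For readers curious what a CPU sparse-sparse kernel does, the classic row-by-row formulation (often attributed to Gustavson) is the usual starting point for CSR SpGEMM. The plain-Python sketch below illustrates that idea with a dictionary as the per-row accumulator; it is not a transcription of SMMP or of PyTorch's kernel, and the helper name `csr_spgemm` is hypothetical.

```python
def csr_spgemm(a_ptr, a_idx, a_val, b_ptr, b_idx, b_val):
    """Row-by-row C = A @ B for CSR inputs; returns C as (ptr, idx, val)."""
    c_ptr, c_idx, c_val = [0], [], []
    for i in range(len(a_ptr) - 1):
        acc = {}  # sparse accumulator for row i of C: column -> value
        for p in range(a_ptr[i], a_ptr[i + 1]):
            k, a_ik = a_idx[p], a_val[p]
            # Row i of C gains A[i, k] times row k of B.
            for q in range(b_ptr[k], b_ptr[k + 1]):
                j = b_idx[q]
                acc[j] = acc.get(j, 0) + a_ik * b_val[q]
        for j in sorted(acc):  # emit row i with sorted column indices
            c_idx.append(j)
            c_val.append(acc[j])
        c_ptr.append(len(c_idx))
    return c_ptr, c_idx, c_val

# A = [[1, 0, 2], [0, 3, 0]] and B = [[0, 4], [5, 0], [0, 6]] in CSR form.
c = csr_spgemm([0, 2, 3], [0, 2, 1], [1, 2, 3],
               [0, 1, 2, 3], [1, 0, 1], [4, 5, 6])
print(c)  # ([0, 1, 2], [1, 0], [16, 15]), i.e. C = [[0, 16], [15, 0]]
```

Only pairs of nonzeros that meet on a shared index `k` do any work, which is what makes this formulation attractive when both inputs are very sparse.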

Generalized sparse-matrix dense-matrix multiplication functions. `torch.matmul` also lets you do broadcasting, or matrix x matrix, matrix x vector, and vector x vector operations in batches. If both arguments are 2-dimensional, the matrix-matrix product is returned.

Index Terms: sparse matrix multiplication, sparse formats, spatial hardware. If the first argument is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply. More concretely, SpGEMM is a building block.
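The dimension-dependent behavior of `torch.matmul` described in these paragraphs can be summarized by the result shapes below, using random tensors of arbitrary example sizes. The 1D-by-2D case shows the prepended 1, which PyTorch removes again after the multiply.

```python
import torch

vec = torch.randn(4)
mat_a = torch.randn(3, 4)
mat_b = torch.randn(4, 5)

dot = torch.matmul(vec, vec)     # 1D x 1D: dot product, a 0-dim tensor
mm = torch.matmul(mat_a, mat_b)  # 2D x 2D: matrix-matrix product, (3, 5)
vm = torch.matmul(vec, mat_b)    # 1D x 2D: vec acts as (1, 4), extra dim removed -> (5,)
mv = torch.matmul(mat_a, vec)    # 2D x 1D: matrix-vector product, (3,)

# Batched: mat_b is broadcast over the leading batch dimension -> (10, 3, 5).
batched = torch.matmul(torch.randn(10, 3, 4), mat_b)
# Batch dims (2, 1) and (5,) broadcast to (2, 5); matrices give (3, 6).
broadcast = torch.matmul(torch.randn(2, 1, 3, 4), torch.randn(5, 4, 6))

for name, t in [("dot", dot), ("mm", mm), ("vm", vm), ("mv", mv),
                ("batched", batched), ("broadcast", broadcast)]:
    print(name, tuple(t.shape))
```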

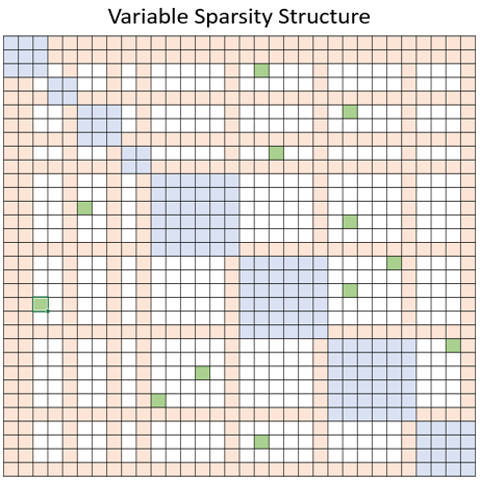

`torch.sparse.mm` performs a matrix multiplication of the sparse matrix `mat1` and the dense matrix `mat2`. The block-sparse extension enables very easy experimentation with sparse matrices, since you can directly replace `Linear` layers in your model with sparse ones. In this formulation, $X' = \mathrm{MLP}\left((1 + \epsilon) \cdot X + A X\right)$, where $A$ denotes a sparse adjacency matrix of shape `[num_nodes, num_nodes]`.
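A minimal sketch of the update $X' = \mathrm{MLP}((1 + \epsilon) \cdot X + A X)$ in core PyTorch, with the aggregation $A X$ done as a sparse-dense `torch.sparse.mm`. The graph, the value of `eps`, the MLP sizes, and the convention that a nonzero `A[i, j]` means node `i` receives node `j`'s features are all assumptions made for this illustration; this is not PyTorch Geometric's own layer.

```python
import torch
from torch import nn

torch.manual_seed(0)
num_nodes, in_dim, out_dim = 4, 3, 2
eps = 0.1  # illustrative value for epsilon

# Nonzero A[i, j] means node i receives node j's features (convention assumed here).
edge_index = torch.tensor([[0, 1, 1, 2, 3],
                           [1, 0, 2, 3, 0]])
A = torch.sparse_coo_tensor(edge_index, torch.ones(edge_index.size(1)),
                            (num_nodes, num_nodes))  # sparse [num_nodes, num_nodes]
X = torch.randn(num_nodes, in_dim)
mlp = nn.Sequential(nn.Linear(in_dim, 8), nn.ReLU(), nn.Linear(8, out_dim))

# X' = MLP((1 + eps) * X + A X), with A X computed as sparse @ dense.
X_new = mlp((1 + eps) * X + torch.sparse.mm(A, X))
print(X_new.shape)  # torch.Size([4, 2])
```

Keeping `A` sparse means the aggregation costs work proportional to the number of edges rather than `num_nodes ** 2`.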

3: Execution of the split 3D SpGEMM algorithm for sparse matrix-matrix multiplication

DeepSpeed Sparse Attention (DeepSpeed)

BigBird or sparse self-attention: how to implement a sparse matrix? (Stack Overflow)

Sparse matrix multiplication is too slow (Issue #16187, pytorch/pytorch, GitHub)

Sparse x dense -> dense matrix multiplication (PyTorch Forums)

How can I implement the dot product (torch.mul) of a dense matrix and a sparse matrix? (Issue #1091, rusty1s/pytorch_geometric, GitHub)

Stable softmax for sparse matrices (peterbloem.nl)

CSR and DCSR formats

Feature request: sparse x sparse -> sparse (Issue #1550, pytorch/pytorch, GitHub)

https://arxiv.org/pdf/2007.03179

Batch matmul with sparse matrix, dense vector (Issue #14489, pytorch/pytorch, GitHub)