Matrix Multiplication Benchmark

There are 6 ways to set it. I changed everything to incorporate floats and now there is a problem.

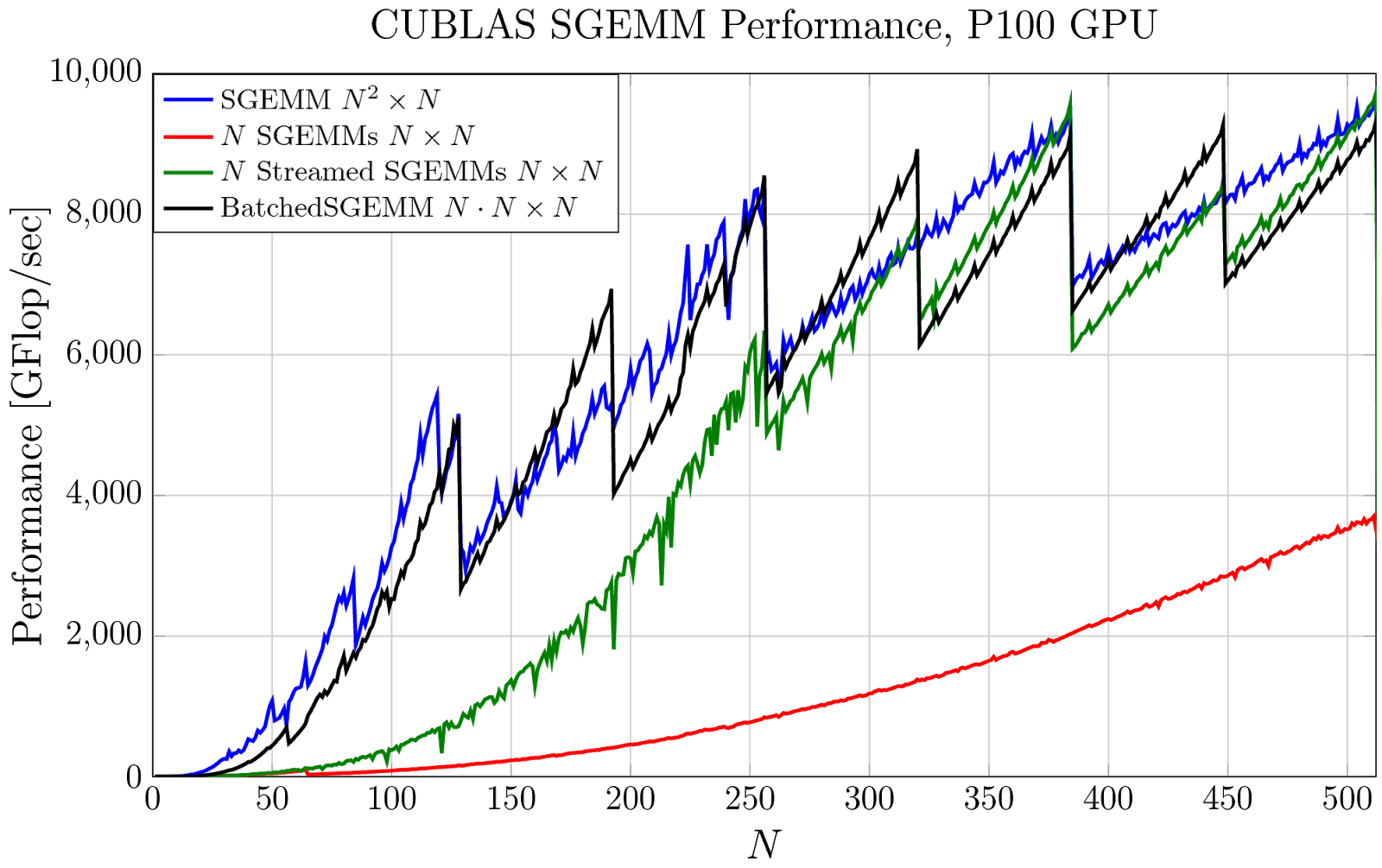

Pro Tip Cublas Strided Batched Matrix Multiply Nvidia Developer Blog

Im trying to show my boss how the GPU improves matrix multiplication by a great amount.

Matrix multiplication benchmark. Ive tested this 2 multiplication functions overloaded and free. Depending on what I set BLOCK_SIZE the results become unpredictable. Import tensorflow as tf.

You can use Strassen algorithm of running time On281 for large square matrix multiplication which is around 10x faster than the native multiplication which runs in On3. MATMUL can do this for a variety of matrix sizes and for different arithmetics real complex double precision integer even logical There are many algorithms built in including the simple triple DO loop actually not so simple. MATMULis a FORTRAN77 program which compares various methods for computing the matrix product.

It does not use other more efficient algorithms such as the Strassen algorithm or the Coppersmith-Winograd. MATMUL a C program which compares various methods for computing the matrix product. Second obtained through our benchmark tests with 10231025 and 20472049 dimensional square matrix multiplications are shown in Fig7.

Mat4 opMulin mat4 _m. I am trying to find out how long a matrix multiplication takes on different processors. Benchmark Tests for TD-Based Matrix Multiplication The computational time unit.

A Matrix Multiplication Benchmark. I found in the blog of Martin Thoma a benchmark between python vs java vs c for matrix multiplication using the naive algorithm. I found this link which gave me some basic numbers for 16x16 timing.

Since normal matrix multiplication is an O n³ time algorithm with O n² output elements a reasonable hypothesis could be that those times increase linearly with the size. Heath Department of Computer Science and Center of Simulation of Advanced Rockets University of Illinois at Urbana-Champaign Abstract Sparse matrix-vector multiplication SpMxV is one of the most important computational kernels in scientific computing. A B C.

This program performs matrix multiplication on various sized square matrices using the standard approach. 1 Matlab performance. Results in microseconds per output element.

In the programming guide I coded in the matrix multiplication without shared memory access for integers and it worked perfectly. Here I will try to extend this benchmark by creating a python module in c and calling that module from python. A Matrix Multiplication Benchmark.

Ive created a simple 4x4 Matrix struct and have made some tests with surprising results. The matrix multiplication function was selected as a benchmark because of the abundance of matrix operations in DSP applications. For example Im.

A B C. Improving Performance of Sparse Matrix-Vector Multiplication Ali Pınar Michael T. MATMULcan do this for a variety of matrix sizes and for different arithmetics real complex double precision integer even logical.

Size baseline 64 089 128. All together you can have a c implementation faster than the matlabs one. Tic for i1100 cab.

In these figures we can see each seconds of DD TD and QD Strassen matrix multiplication. A B C. TMS320C6748 Other Parts Discussed in Thread.

MATMULcan do this for a variety of matrix sizes and for different arithmetics real complex double precision integer even logical. Time 143 - 07 ms with 10 runs performed arand10001000. X nparray nprandomrandn size size dtype npfloat32.

MATMULis a FORTRAN90 program which compares various methods for computing the matrix product. Mat4 mulin mat4 m1 in mat4 m2. Cuda Matrtix Multiplication Benchmark.

Since there is very little data dependency this function is. Cronin 4 years ago. Anatomy of High-Performance Matrix Multiplication 3 0 200 400 600 800 1000 1200 1400 1600 1800 2000 0 1 2 3 4 5 6 7 mn GFLOPSsec Pentium4 36 GHz dgemm GOTO dgemm MKL dgemm ATLAS 0 200 400 600 800 1000 1200 1400 1600 1800 2000 0 2 3 4 5 6 7 mn Power 5 19 GHz dgemm GOTO dgemm ESSL dgemm ATLAS 0 200 400 600 800 1000 1200 1400 1600 1800.

Import numpy as np. Also SSEAVX can help you to get around 8-20x faster for code execution. The function multiplies two 4x4 matricies a and b and stores the result in a product matrix.

Proper tensorflow benchmark Youll find execution times match or are better than GPU skcuda on a Tesla K80. End toc100 2 Python performance timeit adotboutc. A Matrix Multiplication Benchmark.

Charles Ung92 Intellectual 440 points Part Number. Although Ive heard from similar results in c struct mat4 float44 m.

Sparse Matrix Vector Multiplication Benchmark

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow

Matrix Multiply An Overview Sciencedirect Topics

Matrix Matrix Multiplication Parallelized With Openmp Download Scientific Diagram

How To Speed Up Matrix Multiplication In C Stack Overflow

Benchmarking Python Vs C Using Blas And Numpy Stack Overflow

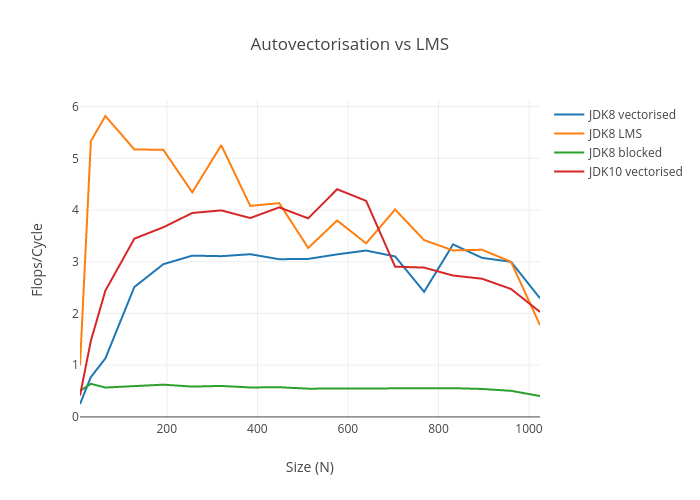

Matrix Multiplication Revisited Richard Startin S Blog

Performance Of Sequential Vs Parallel Matrix Multiplication Using Download Scientific Diagram

Benchmarking Blas Libraries Blas Basic Linear Algebra Subroutines By Assaad Moawad Datathings Medium

Matrix Multiplication Background User Guide Nvidia Deep Learning Performance Documentation

Why Is Matrix Multiplication Faster With Numpy Than With Ctypes In Python Stack Overflow

Matrix Multiplication Sequential Vs Parallel Performance Test Stack Overflow

Is Eigen Slow At Multiplying Small Matrices Stack Overflow

Opencl Matrix Multiplication Sgemm Tutorial

Comparison Of Our Matrix Multiplication Against Cublas Clblas Download Scientific Diagram

Matrix Multiplication Strassen Vs Standard Stack Overflow

Matrix Vector Multiplication In Cuda Benchmarking Performance Stack Overflow